Turn Ideas into Income: Create AI-Driven Content Pipelines with Local LLMs

The rise of Large Language Models (LLMs) has changed how we create and distribute content. From blog posts and documentation to tutorials and newsletters, drafts that used to take hours can now be produced in minutes. Better still, you can turn this content into income.

And you don't need expensive API access, constant internet, or cloud subscriptions to do it. Thanks to tools like Ollama, you can run powerful models locally on your own machine—automating entire AI-driven content pipelines that are fast, private, and monetizable.

Why Local LLMs for Monetized Content Creation?

If you're a creator, technical writer, or developer, here's why using local models is a game changer:

No API or SaaS costs: Once you have the hardware, generating content incurs no per-token or subscription fees.

Private by default: Ideal for client projects, enterprise documentation, or sensitive data.

Offline-ready: Create anywhere—on a plane, off the grid, or in a secure environment.

Custom workflows: Tailor agents to your niche and audience for maximum output.

With local LLMs, you're not just creating faster—you’re building a sustainable, monetizable system without handing over your content (or money) to third-party services.

While writing this blog post, I realized it’s critical to highlight specific high-value technical use cases where AI-driven content pipelines deliver outsized returns. These aren’t just generic ‘content mills’—they’re specialized workflows that solve real problems for technical teams, enterprises, and developers.

Technical Use Cases (High Value for Enterprises & Dev Teams)

The combination of CrewAI + Local LLMs enables powerful workflows for technical teams, including:

1. Auto-Generate Documentation from Code

Perfect for:

SDKs, APIs, and internal tools

Rapidly producing or updating docs during development

2. Instant Developer Onboarding Guides

Build:

Quickstart pages

Architecture overviews

Dev environment setup instructions

3. Hands-On Lab Content for Frameworks

Especially useful in:

Internal developer training

Custom software demos for clients

Corporate learning platforms

4. AI Agents for Internal Knowledge Mining

Turn scattered internal documents into structured wiki pages

Use for IT support, DevOps runbooks, or security policies

These workflows aren’t just time-savers—they directly impact operational efficiency, reduce human error, and create billable deliverables for technical consultants or agencies.
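As a rough illustration of the first use case (documentation from code), the sketch below uses Python's standard `ast` module to pull function names, arguments, and docstrings out of a source file and turn them into a prompt that a local model could expand into reference docs. The helper names `extract_functions` and `build_doc_prompt` and the prompt wording are assumptions made for this example; they are not part of CrewAI or Ollama.

```python
import ast


def extract_functions(source: str) -> list[dict]:
    """Return name, argument names, and docstring for each top-level function."""
    tree = ast.parse(source)
    functions = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions.append({
                "name": node.name,
                "args": [arg.arg for arg in node.args.args],
                "doc": ast.get_docstring(node) or "",
            })
    return functions


def build_doc_prompt(functions: list[dict]) -> str:
    """Build a plain-text prompt asking a model to draft reference docs."""
    lines = ["Write Markdown reference documentation for these functions:"]
    for fn in functions:
        signature = f"{fn['name']}({', '.join(fn['args'])})"
        summary = fn["doc"] or "no docstring provided"
        lines.append(f"- {signature}: {summary}")
    return "\n".join(lines)


if __name__ == "__main__":
    sample = 'def add(a, b):\n    """Add two numbers."""\n    return a + b\n'
    print(build_doc_prompt(extract_functions(sample)))
```

The resulting prompt can be handed to whichever local model or agent drafts the documentation; extracting the signatures first keeps the model grounded in what the code actually declares.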

If you are a solo entrepreneur, you can also package these use cases as services for different companies, which boosts your monetization potential.

To cut a long story short, let's dive into a step-by-step guide.

Step-by-Step: Build Your First Local AI Content Engine

Even if you're a complete beginner, it takes only a few tools to get started:

Toolkit:

Ollama – Run models like Llama 3, Mistral, and Gemma locally.

CrewAI – Create AI agents that collaborate (e.g., writer, editor, researcher).

Markdown Editor / Notion / Obsidian – For writing and formatting.

Your machine – Ideally with 16 GB or more of RAM and a modern CPU or GPU.
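Once Ollama is installed and a model has been pulled, it serves a local HTTP API, by default on port 11434. The sketch below uses only the Python standard library to show roughly how a script could send a prompt to that API. The model name `llama3` and the helper names `build_request` and `generate` are assumptions for illustration; substitute whichever model you have pulled locally.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Prepare a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt and return the generated text (requires Ollama to be running)."""
    with urllib.request.urlopen(build_request(prompt, model)) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    print(generate("Suggest three blog post titles about DevOps runbooks."))
```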

Quick Workflow Example:

Ask an agent to generate blog post ideas in your niche.

Pass the idea to a Writer Agent to draft the post.

An Editor Agent reviews and improves tone.

Export to your platform of choice: Medium, Substack, Dev.to, etc.

This setup costs almost nothing to run, and you can use it on your local workstation.
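The first three steps of the workflow above can be sketched as a chain of prompts in which each step's output feeds the next. This is a minimal sketch in plain Python rather than CrewAI code: `run_pipeline` and the prompt wording are hypothetical, and the `llm` argument stands for any function that sends a prompt to a local model and returns its text.

```python
from typing import Callable

# Any function that takes a prompt and returns text, e.g. a call to a local model.
LLM = Callable[[str], str]


def run_pipeline(niche: str, llm: LLM) -> dict[str, str]:
    """Idea -> draft -> edited post, each step feeding the next."""
    idea = llm(f"Suggest one blog post idea for the niche: {niche}")
    draft = llm(f"Write a blog post draft based on this idea: {idea}")
    final = llm(f"Edit this draft for tone and clarity: {draft}")
    return {"idea": idea, "draft": draft, "final": final}


if __name__ == "__main__":
    # Stand-in model so the sketch runs without Ollama; swap in a real call.
    def echo_llm(prompt: str) -> str:
        return f"[model output for: {prompt[:40]}]"

    for stage, text in run_pipeline("DevOps", echo_llm).items():
        print(stage, "->", text)
```

Keeping the intermediate results makes it easy to review the idea and the raw draft before the edited version goes to your publishing platform.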

How to Make Money from AI-Generated Content

Once your content pipeline is running, here are real ways to monetize:

1. Publish Paid Newsletters

Use local LLMs to create consistent, high-value content.

Platforms like Substack let you monetize with paid tiers.

Bonus: Create multiple newsletters for different niches.

2. Freelance Documentation & Tech Writing

Use CrewAI to auto-generate:

Developer docs

Onboarding guides

Product manuals

Offer it as a service on Fiverr, Upwork, or directly to companies.

3. Create and Sell Digital Products

Tutorials, eBooks, or guides powered by your AI pipeline.

Use Gumroad or LemonSqueezy for easy delivery.

Automate production so you can scale effortlessly.

4. Build Micro SaaS / Tools

Offer internal documentation-as-a-service or tutorial builders powered by CrewAI.

Monetize via subscriptions or one-off access.

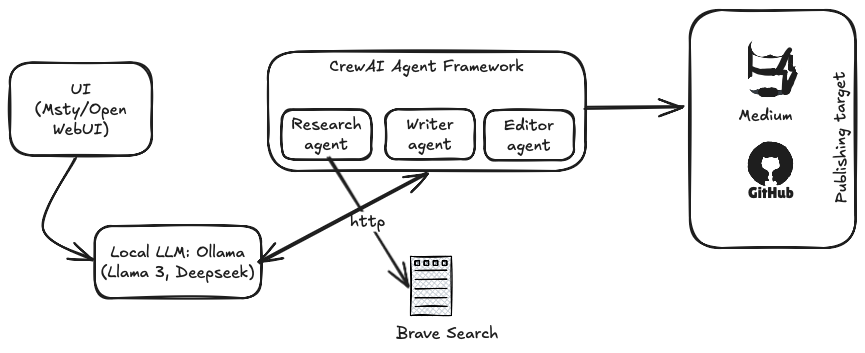

AI-Driven Content Pipeline Architecture

Building your own AI-powered content pipeline may sound complex, but with the right tools, it becomes surprisingly lightweight and manageable—especially when everything runs locally. This kind of setup gives you full control, zero recurring costs, and no dependency on the cloud or external APIs.

Let’s walk through what this architecture looks like:

UI Layer: Msty / Open WebUI

Msty is a beautiful and lightweight UI for interacting with local LLMs via Ollama. It allows you to:

Run models like Llama 3 or Mistral in a chat-style interface

Test agent behaviors from CrewAI visually

Share or export generated outputs

Keep everything local—no internet required

For content creators who prefer a graphical interface over command line tools, Msty adds ease-of-use and accessibility.

For instructions on installing a local LLM, see: First step to the world of AI: installing a local LLM

Agent Layer: Powered by CrewAI

This layer is made up of agents such as:

Researcher Agent: Finds topic summaries or keywords

Writer Agent: Generates blog drafts or documentation

Editor Agent: Refines tone, checks grammar, applies SEO

QA Agent (optional): Reviews consistency or checks technical accuracy

These agents collaborate in a multi-step process, passing prompts and outputs to each other. This is the “pipeline” where actual automation magic happens.
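A rough sketch of how such a crew might be wired up is shown below. It assumes a recent CrewAI release that exposes the `LLM` class, an Ollama server on its default port, and a pulled `llama3` model. The role wording, the `ROLES` list, and the `build_crew` helper are illustrative choices, not the configuration used for this article.

```python
# Role definitions kept as plain data; all wording here is illustrative.
ROLES = [
    {"role": "Researcher",
     "goal": "Collect key points and keywords about {topic}",
     "task": "Summarize the main points a reader should know about {topic}.",
     "expected": "A bullet list of key points and keywords."},
    {"role": "Writer",
     "goal": "Draft a clear blog post about {topic}",
     "task": "Write a blog post about {topic} using the research notes.",
     "expected": "A complete draft in Markdown."},
    {"role": "Editor",
     "goal": "Polish the draft about {topic}",
     "task": "Refine tone, fix grammar, and tighten the draft.",
     "expected": "A publication-ready post in Markdown."},
]


def build_crew(model: str = "ollama/llama3",
               base_url: str = "http://localhost:11434"):
    """Assemble a sequential crew backed by a local Ollama model."""
    # Imported here so the role data above can be inspected without crewai installed.
    from crewai import LLM, Agent, Crew, Process, Task

    llm = LLM(model=model, base_url=base_url)
    agents, tasks = [], []
    for spec in ROLES:
        agent = Agent(
            role=spec["role"],
            goal=spec["goal"],
            backstory=f"An experienced {spec['role'].lower()} on a content team.",
            llm=llm,
        )
        agents.append(agent)
        tasks.append(Task(description=spec["task"],
                          expected_output=spec["expected"],
                          agent=agent))
    # Sequential process: tasks run in order, each building on the previous output.
    return Crew(agents=agents, tasks=tasks, process=Process.sequential)


if __name__ == "__main__":
    result = build_crew().kickoff(inputs={"topic": "local LLMs for technical writing"})
    print(result)
```

The optional QA agent could be added as a fourth entry in `ROLES` without changing the rest of the sketch.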

Why CrewAI?

CrewAI lets you build role-based AI teams, much like assigning a writer, editor, and researcher in a real-world content team. Each agent has a specific job, and the agents work together automatically to create high-quality content.

You don’t need deep Python skills to use it: basic setup and a simple config file that defines who does what are enough. It works with local LLMs served through Ollama, so everything runs on your own machine, privately and without API costs.

It’s the easiest way to turn your ideas into structured content, with no coding expertise required.

For a complete example of developing an AI pipeline, see the Jupyter notebook in chapter 7.

Output Layer: Ready to Publish

After the AI agents complete their tasks, the result can be exported to:

Markdown for static site generators (Jekyll, Hugo)

HTML for landing pages

PDF/Word for client delivery

Or directly copied to platforms like Substack, Medium, or Dev.to

This output is not only fast to generate but can also be high-quality enough to monetize or use professionally.
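For the Markdown route, static site generators such as Jekyll and Hugo read metadata from a YAML front matter block at the top of each file. The sketch below shows one way the final agent output could be wrapped for them. The function name `to_markdown_post` and the choice of `title`, `date`, and `tags` fields are assumptions for illustration; adapt the fields to your site's theme.

```python
from datetime import date


def to_markdown_post(title: str, body: str, tags: list[str], published: date) -> str:
    """Wrap generated text in YAML front matter, as read by Jekyll and Hugo."""
    safe_title = title.replace('"', '\\"')  # escape double quotes inside the quoted title
    tag_list = ", ".join(tags)
    front_matter = "\n".join([
        "---",
        f'title: "{safe_title}"',
        f"date: {published.isoformat()}",
        f"tags: [{tag_list}]",
        "---",
    ])
    return f"{front_matter}\n\n{body.strip()}\n"


if __name__ == "__main__":
    print(to_markdown_post("Local LLM pipelines", "Draft text goes here.",
                           ["ai", "docs"], date.today()))
```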

If you’re interested in AI with local LLMs, our book Generative AI with local LLM covers the topic in more depth.

Pro Tip: Choose a Profitable Niche

If you're monetizing, don’t just create randomly. Use your AI agents to:

Analyze market trends

Find SEO keywords

Build content clusters that target high-paying audiences (e.g., SaaS, DevOps, cybersecurity)

The more targeted your niche, the more valuable your content.

Final Thoughts

AI isn’t just a writing assistant—it’s your business partner. With a few simple tools, you can:

Build AI-driven content pipelines on your laptop

Keep everything private and nearly cost-free

Automate output at scale

Monetize through newsletters, client work, digital products, or services

Start today—and turn your ideas into income using the power of local LLMs.