A Few Ways to Bring AI with Local LLMs into Google Spreadsheets

The era of smart spreadsheets is here—and you don’t need to rely on cloud APIs or premium subscriptions to make it happen. With local Large Language Models (LLMs) running through Ollama and some clever plumbing, you can bring powerful AI features right into your Google Sheets—all without sending data to the cloud.

In this post, we’ll walk through how to:

Run a local LLM using Ollama

Connect it to Google Sheets

Use tools like ngrok or cloudflared to expose the local service

Send prompts from a spreadsheet and get real-time AI responses directly in cells

Let’s get started from the use cases.

Use cases:

Analyze sales data or customer feedback using LLM-powered analysis.

Analyze mathematical test results – e.g., automatically calculate and interpret performance metrics from mathematical assessments or exams. My favorite ;-) I often uses this use case to check my son mathematical assessments (It doesn't mean that I do not know high school Algebra).

Summarize long text in a cell.

Generate product descriptions.

Translate phrases locally.

Generate formulas or regex expressions.

Note that, Google now offers built-in AI features in Google Sheets via the Gemini for Workspace integration. These features allow you to:

Use smart fill and smart formula suggestions

Generate content with “Help me organize”

Get AI-powered summaries and insights

So why bother with a local LLM setup? Because, with local LLM:

Data Privacy: Runs entirely on your machine, data will not sending to any cloud service.

Cost: Free with local resources. Don't requires Workspace subscription or API fees.

Customization: Fully customizable prompts, models, logic

Model Choice: You can use LLaMA, Mistral, Code LLaMA, etc. Not bound with Gemini only.

So, I think it perfect solution for developers, researchers, and privacy-conscious users.

After a quick Google search, I found a comprehensive set of Python libraries and Google Apps Script solutions that can bring AI into Google Sheets:

ollama-py GitHub Repository

Among these, the second approach offers a simple and smooth integration, allowing you to quickly and efficiently use a local LLM with Google Sheets. In this post, we’ve chosen to follow this method for integrating a local LLM with Google Sheets, focusing on analyzing mathematical test results.

Prerequisites:

To follow along, you’ll need:

A computer with Ollama installed

A Google account and access to Google Sheets

You can use either ngrok or cloudflared to tunnel your local server. Alternatively, if supported by your router (such as a Kennetic), you can set up tunneling directly through your Wi-Fi router.

Optional:

Python and a few libraries.

Note that ngrok requires an API key to use its cloud service, whereas cloudflared can be used without the need for an API key.So, let's get started.

Step 1: Install and Run Ollama.

First, install Ollama on your local machine if you haven’t already from the following link.

Then, pull and start a model (e.g., LLaMA 3.1):

ollama pull llama3

ollama run llama3This runs a REST API on http://localhost:11434 by default.

If you're having trouble getting Ollama up and running locally, please check my previous post here. If you're interested in using local LLMs for various purposes, I highly recommend taking a look at my book, Generative AI with local LLM.

Step 2: Expose the Local Ollama Using ngrok or cloudflared.

Because Google Sheets can’t access localhost, you’ll need to tunnel the server. This allows you to access your locally running services (such as a local server, app, or API) securely from anywhere on the internet without exposing your system to potential threats.

Personally, I uses Kennetic cloud to expose my App or URL on the internet. It requires networking setups, such as opening ports in the firewall and dealing with dynamic IP addresses. You can see the Keenetic router documentation for details.However, Cloudflared is a powerful tool for securely exposing your local server or application to the internet by creating a secure tunnel. It works in a similar way to ngrok but has its unique advantages, especially when it comes to ease of use and setup. Here’s why and how you might use cloudflared:

Installing on MacOS:

brew install cloudflaredCheck the version:

cloudflared --versionThe output should be something similar "cloudflared version 2025.4.2 (built 2025-04-30T13:15:20Z)"

Create the tunnel:

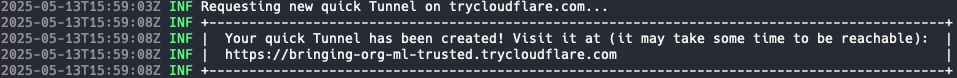

cloudflared tunnel --url http://127.0.0.1:11434 --http-host-header "localhost:11434"It will create a tunnel, making your local Ollama instance accessible through a unique public URL.

Note that, each time you restart the cloudflared tunnel, it will create a new unique URL for the outside world. The setup should be very similar as shown below:

Step 3: Connect Google Sheets to Your Local LLM

Now that your API is public, you can call it from Google Sheets using Apps Script.

Go to your Google Sheet:

Click Extensions > Apps Script

Paste the following code:

const URL_CELL = 'Settings!A1'; // base tunnel URL

const MODEL_CELL = 'Settings!A2'; // cell that holds the model name

function OLLAMA(prompt, model) {

const ss = SpreadsheetApp.getActiveSpreadsheet();

let base = ss.getRange(URL_CELL).getValue().toString().trim();

let mdl = model || ss.getRange(MODEL_CELL).getValue().toString().trim(); // ← one new line

if (!base) throw new Error('Missing tunnel URL in ' + URL_CELL);

if (!mdl) throw new Error('Missing model name in ' + MODEL_CELL);

if (base.endsWith('/')) base = base.slice(0, -1); // strip trailing /

const url = base + '/api/generate';

const r = UrlFetchApp.fetch(url, {

method : 'post',

contentType : 'application/json',

payload : JSON.stringify({ model: mdl, prompt, stream: false })

});

return JSON.parse(r.getContentText()).response;

}Save the script.

Create a new Google sheet named Settings with the following informations:

Settings!A1: Paste your Cloudflare URL (https://....trycloudflare.com)

Settings!A2: Paste model name (

llama 3.2 or gemma3:1b) which one you pull and run on step 1.

Now, run the App script from the previous step. It will execute the script and you are ready to go.

Let's create a new Sheet and add some data and analyze them through local LLM.

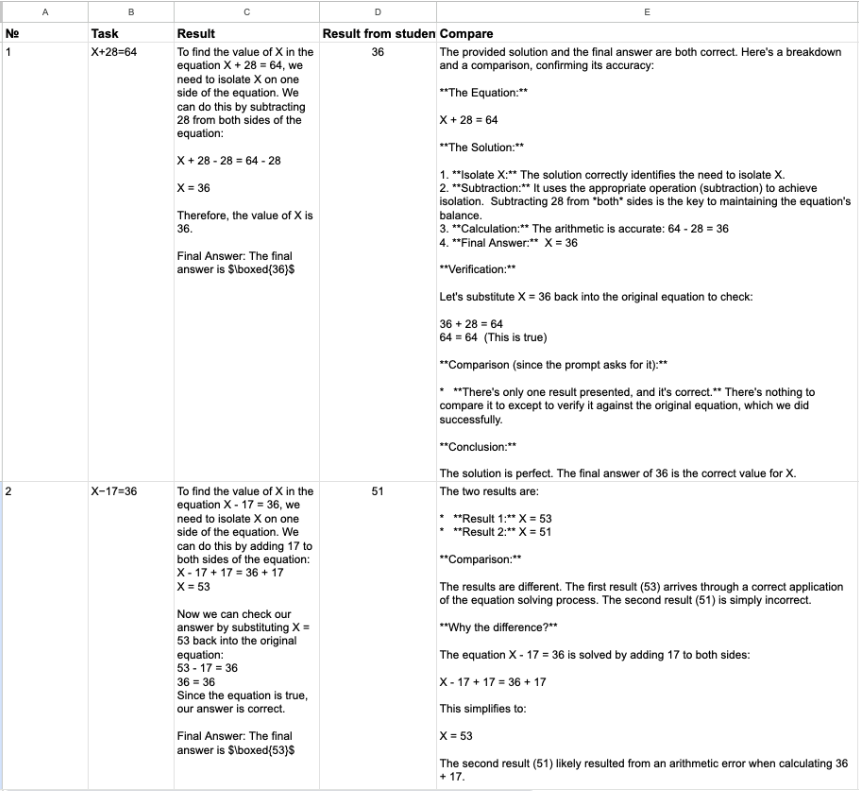

I’ll use a few math problems from my son’s homework to evaluate how the local LLM interprets and scores the results.

Task 1:

Column B: X+28=64

Column C [ Formula]: =OLLAMA("Find the value of X in equation" &B2)

Column D [ Result from the Student]: 36

Column E [Compare] : =OLLAMA("Compare the two results" &C2 &D2)

Task 2:

Column B: X−17=36

Column C [ Formula]: =OLLAMA("Find the value of X in equation" &B2)

Column D [ Result from the Student]: 51

Column E [Compare] : =OLLAMA("Compare the two results" &C2 &D2)

And save the sheet ;-)

After a while you should get the response from the LLM running on Ollama as shown below:

Here, what's happened under the hood. The formula [=OLLAMA()] is used inside Google Sheets Cell and sends a prompt to a local LLM (like one running through Ollama) to solve a math problem. Let’s break it down:

OLLAMA(): This is a custom function (defined via Google Apps Script) that connects your spreadsheet to a local LLM server via HTTP. When you callOLLAMA(...), it sends the provided prompt to the LLM and returns its response into the cell."Find the value of X in equation": This is a static string. It's the first part of the question you're asking the LLM.& B2: The&operator concatenates (joins) the static string with the contents of cellB2. So ifB2contains something like3x + 5 = 20, the full prompt becomes:

"Find the value of X in equation 3x + 5 = 20"

The result: the local LLM receives that full sentence and replies with the computed value of X, which is then displayed directly in the Google Sheet.

=OLLAMA("Compare the two results" & C2 & D2)This formula sends a prompt to a local LLM via the custom OLLAMA() function, asking it to compare the contents of two cells.

Drawbacks: I found that making more than 20 concurrent OLLAMA function calls to the local LLM often results in timeout errors.So easy.

With these tools, you can integrate powerful local AI into one of the most widely used productivity apps: spreadsheets. Whether you’re building an internal tool, automating content, or just experimenting with LLMs, this setup offers you unmatched flexibility and privacy.

All the credit's goes to the creators of the project Ollama.py and Deepcharts.

Have fun turning your spreadsheets into AI-powered workspaces—on your own terms.