Model Context Protocol (MCP): Connecting Local LLMs to Various Data Sources

Anthropic launched the Model Context Protocol (MCP) in November 2024 as an open standard for data exchange between LLMs and various data sources. The protocol provides a simplified way for LLMs to integrate with tools and services to perform tasks such as searching files on local systems, accessing GitHub repositories to edit files, and streamlining interactions with external platforms.

From the beginning, the MCP protocol was supported by the Claude Desktop app, and within a few months, several applications like Cline and Cursor AI adopted it to integrate with popular enterprise systems such as Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer. If you follow AI events or newsletters, you’ve likely come across numerous articles and vlogs about the MCP protocol and its use with the Claude Desktop app.

One interesting aspect of the protocol is that any LLM with function-calling support, such as Llama or Qwen served through Ollama, can be used with it. This sparked my curiosity to experiment with the protocol on my local LLM running on home servers. There are also a few open-source projects on GitHub that enable the use of MCP with locally hosted LLMs.

Since I am not a Python specialist or a data scientist, it took me a few hours to work through setting up the MCP protocol with a local LLM. I strongly believe that sharing my experience will save others a few hours of their time.

So, in this blog post, I will share my experience of using the MCP protocol with a local LLM.

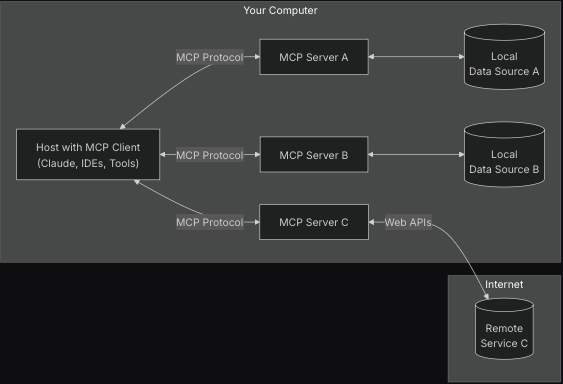

Let's start with the general architecture. As explained in the documentation, MCP follows a client-server architecture in which a host application can connect to multiple servers.

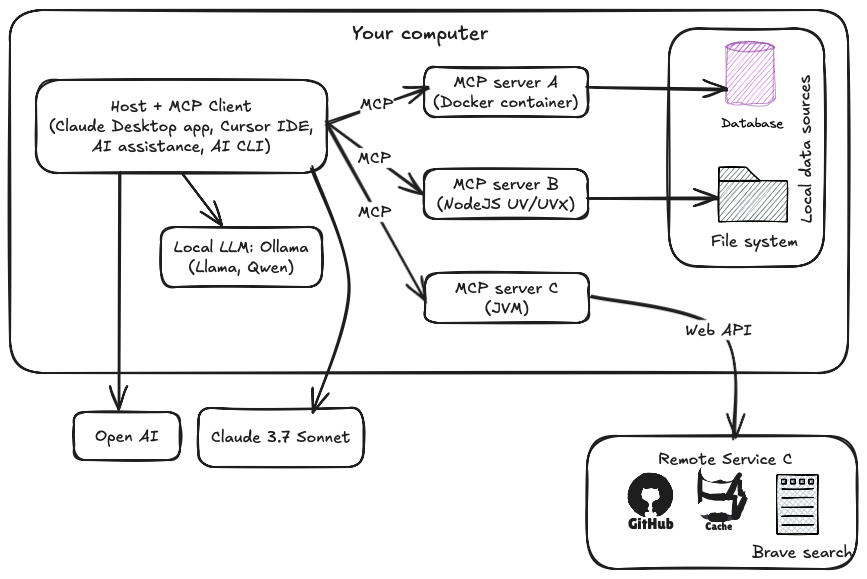

The image was taken from the MCP site. At first glance, the architecture appears to be very high-level, making it unclear where the LLM or AI model is located. So, I would like to break down the architecture in more detail to explain all the nuts and bolts.

MCP Host:

An application that can access services through the MCP protocol. This could be the Claude Desktop app, an AI agent/CLI, or the Cursor IDE. Such applications also use an LLM (local or remote) to perform various tasks.

MCP Client:

A client integrated with the host application to connect with the MCP server.

MCP Server:

An application or program that exposes specific capabilities through the MCP protocol. The server can run in a Docker container, on the JVM, or as a Node.js (NPX) or Python (UV/UVX) process. The MCP community also provides pre-built servers for performing different tasks.

Local Data Sources:

Databases or file systems located on your local machine.

Remote Services:

External resources, such as GitHub or Brave Search, accessible through web APIs.

This is sufficient unless you plan to develop your own MCP client or server. Fortunately, the MCP community provides all the necessary documentation and SDKs to help you get started quickly with the protocol.
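To make the client/server split a little more concrete: MCP messages are JSON-RPC 2.0 objects, and a client typically asks a server for its tools (`tools/list`) and then invokes one of them (`tools/call`). The sketch below only builds those request payloads. The helper names, and the `list_directory` tool with its `path` argument, are illustrative assumptions; a real client would also perform the `initialize` handshake and manage the transport, which the SDKs handle for you.

```python
import itertools
import json

# JSON-RPC requests carry an id so responses can be matched to them.
_ids = itertools.count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def list_tools_request():
    """Ask the server which tools it exposes."""
    return make_request("tools/list")


def call_tool_request(name, arguments):
    """Ask the server to run one tool with the given arguments."""
    return make_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(json.dumps(list_tools_request()))
    # "list_directory" and its "path" argument are hypothetical examples.
    print(json.dumps(call_tool_request("list_directory", {"path": "/tmp"})))
```

The host never talks to the data source directly: it hands requests like these to the MCP client, and the server does the actual work.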

As mentioned earlier, I will experiment with my local LLM running on Ollama using MCP. For this example, I will use qwen2.5:7b as my local LLM, but you can use any LLM that supports function calling. Note that reasoning models like DeepSeek that lack function-calling support will not work with MCP.
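Function calling matters because the host has to give the model a machine-readable description of each tool. As a rough sketch, an MCP tool descriptor (with `name`, `description`, and `inputSchema`) can be mapped to the OpenAI-style function schema that Ollama's chat API also accepts. The `read_file` descriptor below is made up for illustration, and this is not necessarily how any particular host performs the conversion.

```python
import json


def mcp_tool_to_function_schema(tool):
    """Map an MCP tool descriptor to an OpenAI-style function definition."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP already describes tool arguments with JSON Schema,
            # so the schema can be passed through unchanged.
            "parameters": tool.get(
                "inputSchema", {"type": "object", "properties": {}}
            ),
        },
    }


# Hypothetical tool descriptor, shaped like an entry in a tools/list result.
example_tool = {
    "name": "read_file",
    "description": "Read a file from an allowed directory",
    "inputSchema": {
        "type": "object",
        "properties": {"path": {"type": "string"}},
        "required": ["path"],
    },
}

if __name__ == "__main__":
    print(json.dumps(mcp_tool_to_function_schema(example_tool), indent=2))
```

A model without function-calling support has no way to answer with a structured request for `read_file`, which is why such models cannot drive MCP tools.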

After a quick search, I found a few GitHub projects that enable connecting local LLMs (via Ollama) to Model Context Protocol (MCP) servers.

1. MCP-LLM Bridge:

A promising project that connects local LLMs to MCP servers. Its main feature is dynamic tool routing, allowing flexible interaction with different services. However, it has a few disadvantages:

Lack of documentation

Incompatible with native MCP server configurations: You must modify the configuration and provide the physical path to the MCP server configs. On macOS, additional permissions are required to execute TypeScript files.

2. mcp-cli:

A lightweight command-line application for connecting to MCP servers. It is written in pure Python and supports local LLMs through Ollama as well as OpenAI models. The main disadvantage is the lack of tool routing capabilities.

The mcp-cli project also supports all MCP servers provided by the community, regardless of whether they run on Docker or UVX executions. Therefore, in the rest of this post, I will use mcp-cli to connect my local LLM to MCP servers.

UV is a Python package and project manager, written in Rust, that serves as an alternative to Pip. The uvx command allows you to invoke a tool from a Python package without needing to install it.

Requirements:

Local LLM with Ollama: Llama, Qwen

Node.js (if you need to run MCP servers through NPX)

Python >= 3.8

Docker (optional, if you want to run MCP servers in Docker)
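Before going further, it can help to confirm that these prerequisites are actually on your PATH. The following is a small preflight sketch using only the standard library. The executable names (`ollama`, `npx`, `docker`) are assumptions about a typical installation and may differ on your system.

```python
import shutil
import sys

# Executable names are assumptions about a typical install.
REQUIRED = ["ollama"]
OPTIONAL = ["npx", "docker"]  # only needed for NPX- or Docker-based servers


def check_tools(names):
    """Return {name: resolved path or None} for each executable name."""
    return {name: shutil.which(name) for name in names}


def python_ok(minimum=(3, 8)):
    """True if the running interpreter meets the minimum version."""
    return sys.version_info >= minimum


if __name__ == "__main__":
    print("Python >= 3.8:", python_ok())
    for name, path in check_tools(REQUIRED + OPTIONAL).items():
        print(f"{name}: {path or 'not found'}")
```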

Let's get started.

Step 1. Clone the repository.

git clone https://github.com/chrishayuk/mcp-cli

cd mcp-cli

Step 2. Install UV, which will be used to run the MCP servers.

pip install uv

uv sync --reinstall

The project provides two different modes: Chat and Interactive. I will go through the chat mode.

After cloning the project, open the server_config.json file in a text editor.

{
  "mcpServers": {
    "sqlite": {
      "command": "uvx",
      "args": ["mcp-server-sqlite", "--db-path", "test.db"]
    },
    "filesystem": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "/Users/christopherhay/chris-source/chuk-test-code/fibonacci"
      ]
    }
  }
}

Here, two MCP servers are already provided: SQLite and Filesystem.

MCP Server "sqlite": This will run through UVX and doesn’t require any extra configuration.

MCP Server "filesystem": This will run through npx (Node.js package execution). You need to replace /Users/christopherhay/chris-source/chuk-test-code/fibonacci with your local filesystem directory, which will be made available through the tool.
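If you prefer not to edit the file by hand, the same change can be scripted. The sketch below swaps the filesystem directory and prints the command line each server entry would be launched with. It works on an in-memory copy of the sample configuration, the function names are my own, and it assumes the exposed directory is the last entry in `args`, as in the sample.

```python
import json

# In-memory copy of the sample configuration from server_config.json.
SAMPLE_CONFIG = {
    "mcpServers": {
        "sqlite": {
            "command": "uvx",
            "args": ["mcp-server-sqlite", "--db-path", "test.db"],
        },
        "filesystem": {
            "command": "npx",
            "args": [
                "-y",
                "@modelcontextprotocol/server-filesystem",
                "/Users/christopherhay/chris-source/chuk-test-code/fibonacci",
            ],
        },
    }
}


def set_filesystem_root(config, directory):
    """Return a copy of config whose filesystem server exposes `directory`."""
    updated = json.loads(json.dumps(config))  # deep copy of JSON-only data
    # Assumption: the directory is the last argument, as in the sample.
    updated["mcpServers"]["filesystem"]["args"][-1] = str(directory)
    return updated


def launch_commands(config):
    """Map each server name to the command line the host would spawn."""
    return {
        name: [server["command"], *server.get("args", [])]
        for name, server in config["mcpServers"].items()
    }


if __name__ == "__main__":
    # "/path/to/your/directory" is a placeholder; use a real local path.
    config = set_filesystem_root(SAMPLE_CONFIG, "/path/to/your/directory")
    for name, command in launch_commands(config).items():
        print(name, "->", " ".join(command))
```

To apply this to the real file, load it with `json.load`, pass the result through `set_filesystem_root`, and write it back with `json.dump`.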

Step 3. Run your Ollama inference.

ollama run qwen2.5:7b

Step 4. Start the mcp-cli in chat mode.

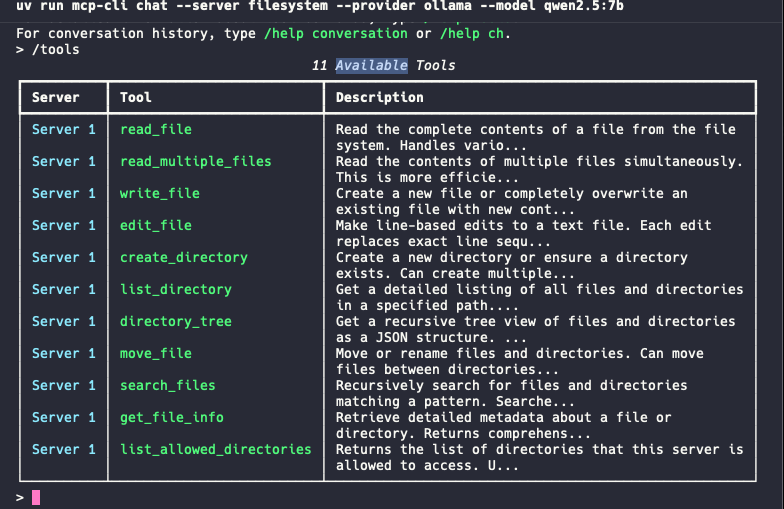

uv run mcp-cli chat --server filesystem --provider ollama --model qwen2.5:7b

Enter the command /tools, and you should see a list of available tools from the Filesystem MCP server.

Enter any prompt like "give me a list of files from the directory Downloads" and play around with it.
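What happens behind such a prompt is roughly a loop: the model either answers directly or asks for a tool, the host runs the tool through the MCP server, and the result goes back to the model. Here is a minimal sketch of that loop with stand-in functions. `fake_chat` and `fake_call_tool` are hypothetical stubs, the message shapes follow the Ollama-style `tool_calls` format, and the real mcp-cli implementation may differ.

```python
import json


def run_turn(chat, call_tool, messages, tools, max_rounds=5):
    """Ask the model, run any tools it requests, and repeat until it answers.

    chat(messages, tools) returns an assistant message dict.
    call_tool(name, arguments) returns the tool result as a string.
    """
    for _ in range(max_rounds):
        reply = chat(messages, tools)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]  # plain answer, the turn is finished
        for call in tool_calls:
            function = call["function"]
            result = call_tool(function["name"], function["arguments"])
            messages.append({"role": "tool", "content": result})
    raise RuntimeError("model kept requesting tools")


def fake_chat(messages, tools):
    """Stub model: request one tool call, then answer from its result."""
    if messages[-1]["role"] == "tool":
        return {"role": "assistant", "content": "Files: " + messages[-1]["content"]}
    return {
        "role": "assistant",
        "content": "",
        "tool_calls": [
            {"function": {"name": "list_directory", "arguments": {"path": "Downloads"}}}
        ],
    }


def fake_call_tool(name, arguments):
    """Stub MCP server call returning a fixed directory listing."""
    return json.dumps(["a.txt", "b.pdf"])


if __name__ == "__main__":
    history = [{"role": "user", "content": "list the files in Downloads"}]
    print(run_turn(fake_chat, fake_call_tool, history, tools=[]))
```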

Step 5. Add a few MCP servers.

Clone the project from GitHub.

git clone https://github.com/modelcontextprotocol/servers

The project contains all the pre-built MCP servers, ready to plug in. We will try only two of them: brave-search and fetch.

Brave-search. An MCP server implementation that integrates the Brave Search API, providing both web and local search capabilities. Through this service we can search for the latest news and articles or look for nearby restaurants. So, let's try to integrate the service with a local LLM. To use the service, you have to sign up and generate an API key.

The MCP server is available in two flavours: Docker and NPX.

For NPX, add the following configuration to the server_config.json file.

"brave-search": {
  "command": "npx",
  "args": [
    "-y",
    "@modelcontextprotocol/server-brave-search"
  ],
  "env": {
    "BRAVE_API_KEY": "YOUR_API_KEY_HERE"
  }
}

I am a Docker lover, so I will try the Docker flavour. Build the image:

docker build -t mcp/brave-search:latest -f src/brave-search/Dockerfile .

Add the following configuration:

"brave-search": {
  "command": "/usr/local/bin/docker",
  "args": [
    "run",
    "-i",
    "--rm",
    "-e",
    "BRAVE_API_KEY",
    "mcp/brave-search"
  ],
  "env": {
    "BRAVE_API_KEY": "YOUR_API_KEY_HERE"
  }
}

Next, run the brave-search server with the CLI.

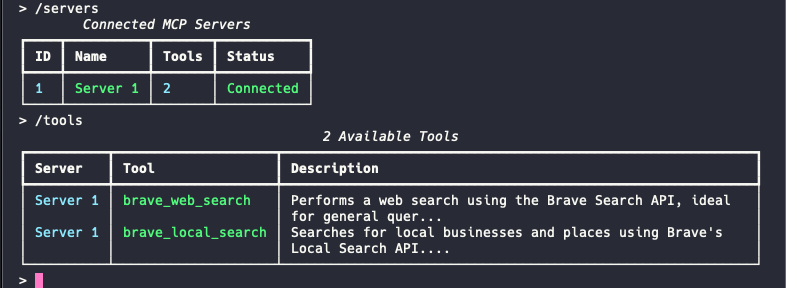

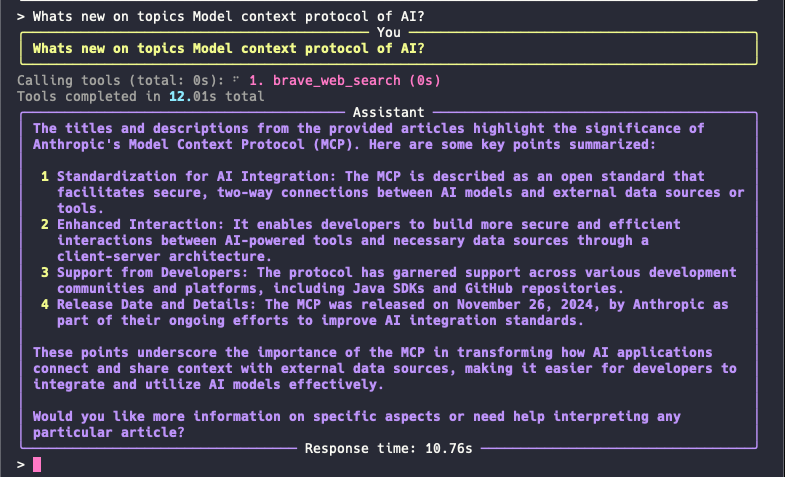

uv run mcp-cli chat --server brave-search --provider ollama --model qwen2.5:7b

Enter the following prompt:

Whats new on topics Model context protocol of AI?

The answer should look similar to the one shown below:

Step 6. Let's try the Fetch MCP server.

The Fetch MCP server provides web content fetching capabilities; the fetched content can then be summarized by the LLM.
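Conceptually, the useful part of such a tool is turning a web page into plain text that fits in the model's context. The sketch below shows that idea with the standard library only. It is an illustration of the general technique, not the actual implementation of the Fetch server, and the class and function names are my own.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.parts.append(text)


def html_to_text(html):
    """Return the visible text of an HTML document, one fragment per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


if __name__ == "__main__":
    page = "<html><body><h1>Title</h1><p>Hello <b>world</b></p></body></html>"
    print(html_to_text(page))
```

The extracted text is what the LLM would receive as the tool result and then summarize.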

Add the following configuration:

"fetch": {
  "command": "uvx",
  "args": ["mcp-server-fetch"]
}

Then run the CLI with the fetch server.

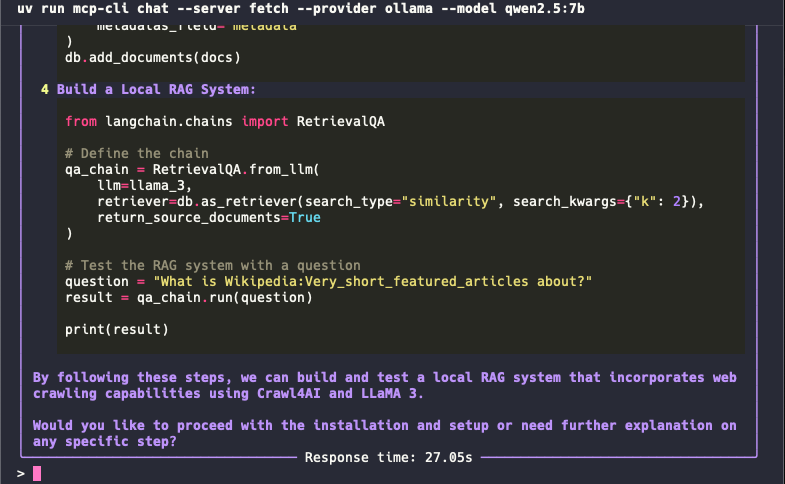

uv run mcp-cli chat --server fetch --provider ollama --model qwen2.5:7b

After running the CLI, enter a prompt like this:

fetch the content from the page "https://www.shamimbhuiyan.ru/blogs/web-crawling-for-rag-with-crawlai" and give me a summary of the page

After a few moments, you should get the content and a summary of the page as shown below:

That’s probably enough for today. Feel free to play around with other MCP servers to see how they work. The MCP community also provides a Java SDK for developing MCP servers and clients. So, I strongly believe that next time, I’ll give developing my own MCP application a try.
