Hands-on lab for Redpanda, part 1: installation and a smoke test

This is a series of hands-on labs where you will get first-hand experience using Redpanda from scratch. Each hands-on lab provides step-by-step procedures to complete specific tasks, such as installing and developing data-streaming applications using Redpanda.

A question probably comes to mind right away: why look for an alternative when there is a de facto standard like Apache Kafka? Apache Kafka is fine, but I need a much more lightweight implementation with a multi-tiered storage solution for replicating data into a data lake.

Let's start with my use case. I am involved in a project where we have to stream data in near real-time from more than 140 applications to our data lake. The requirements are as follows:

Data replication must use CDC, or logical replication.

The design principle should be based on a data mesh architecture where each domain team is involved in data replication and data analysis on their own.

The streaming data platform should be lightweight and support multi-tiered storage.

After a little research, the architecture of Redpanda caught my attention, especially its multi-tiered object storage solution and lightweight Kafka-compatible C++ implementation. After reading the article "How Redpanda works", I decided to give it a try.

In the very first part of this series, I am going to install Redpanda on my home lab server and prepare two simple applications, Producer and Consumer, to play with Redpanda. Here are the prerequisites:

Docker Compose

Java 17

Maven 3.6.0 or later

Step 1: preparation for installation.

I am going to use a single Redpanda broker, so copy and paste the following YAML content into a file named docker-compose.yml on your local file system or on the Docker host machine.

    version: "3.7"
    name: redpanda-quickstart
    networks:
      redpanda_network:
        driver: bridge
    volumes:
      redpanda-0: null
    services:
      redpanda-0:
        command:
          - redpanda
          - start
          - --kafka-addr internal://0.0.0.0:9092,external://0.0.0.0:19092
          # Address the broker advertises to clients that connect to the Kafka API.
          # Use the internal addresses to connect to the Redpanda brokers
          # from inside the same Docker network.
          # Use the external addresses to connect to the Redpanda brokers
          # from outside the Docker network.
          - --advertise-kafka-addr internal://redpanda-0:9092,external://localhost:19092
          - --pandaproxy-addr internal://0.0.0.0:8082,external://0.0.0.0:18082
          # Address the broker advertises to clients that connect to the HTTP Proxy.
          - --advertise-pandaproxy-addr internal://redpanda-0:8082,external://localhost:18082
          - --schema-registry-addr internal://0.0.0.0:8081,external://0.0.0.0:18081
          # Redpanda brokers use the RPC API to communicate with each other internally.
          - --rpc-addr redpanda-0:33145
          - --advertise-rpc-addr redpanda-0:33145
          # Tells Seastar (the framework Redpanda uses under the hood) to use 1 core on the system.
          - --smp 1
          # The amount of memory to make available to Redpanda.
          - --memory 1G
          # Mode dev-container uses well-known configuration properties for development in containers.
          - --mode dev-container
          # Enable debug logs.
          - --default-log-level=debug
        image: docker.redpanda.com/redpandadata/redpanda:v23.3.5
        container_name: redpanda-0
        volumes:
          - redpanda-0:/var/lib/redpanda/data
        networks:
          - redpanda_network
        ports:
          - 18081:18081
          - 18082:18082
          - 19092:19092
          - 19644:9644
      console:
        container_name: redpanda-console
        image: docker.redpanda.com/redpandadata/console:v2.4.3
        networks:
          - redpanda_network
        entrypoint: /bin/sh
        command: -c 'echo "$$CONSOLE_CONFIG_FILE" > /tmp/config.yml; /app/console'
        environment:
          CONFIG_FILEPATH: /tmp/config.yml
          CONSOLE_CONFIG_FILE: |
            kafka:
              brokers: ["redpanda-0:9092"]
              schemaRegistry:
                enabled: true
                urls: ["http://redpanda-0:8081"]
            redpanda:
              adminApi:
                enabled: true
                urls: ["http://redpanda-0:9644"]
        ports:
          - 8080:8080
        depends_on:
          - redpanda-0

If you are using Docker locally, you don't need to change anything in the Docker Compose file. If Docker runs on a remote machine, you have to change the external address in the advertise-kafka-addr parameter, because that is the address clients outside the Docker host machine use to connect.

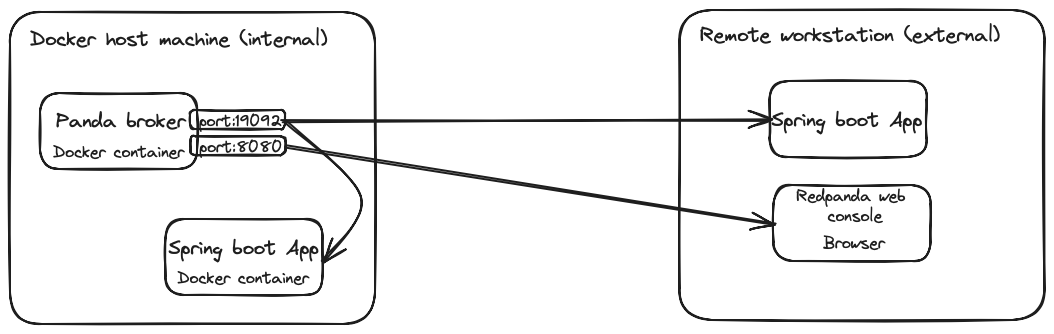

    - --advertise-kafka-addr internal://redpanda-0:9092,external://YOUR_REMOTE_MACHINE_IP:19092

The schematic version of the topology is shown in the next figure.

You can also change the following two parameters to give Redpanda more CPU cores and memory, which improves its performance:

    # Tells Seastar (the framework Redpanda uses under the hood) to use 1 core on the system.
    - --smp 1
    # The amount of memory to make available to Redpanda.
    - --memory 1G

Run the following command in the directory where you saved the docker-compose.yml file.

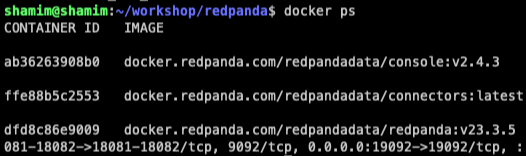

    docker compose up -d  # detached

The above command starts the two Docker containers defined in the file: redpanda-0 and redpanda-console.

Step 2: create a topic with Redpanda web console.

To create a topic, I am going to use the user-friendly Redpanda web console. The web console is available at http://IP_Address_Docker_Host_Machine:8080, as configured in the docker-compose.yml file.
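Before opening the browser, it can help to confirm that the ports published in docker-compose.yml actually accept connections from your machine. The following is a minimal sketch using only the JDK; the class name PortCheck and the 2-second timeout are my own choices for illustration, and the host defaults to localhost unless you pass the Docker host address as the first argument.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Checks whether the ports published by docker-compose.yml accept TCP connections. */
final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or the host name could not be resolved.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: the Docker host is passed as the first argument.
        String host = args.length > 0 ? args[0] : "localhost";
        // Host ports from the compose file: console, Kafka API, schema registry,
        // HTTP Proxy, and admin API.
        int[] ports = {8080, 19092, 18081, 18082, 19644};
        for (int port : ports) {
            System.out.printf("%s:%d reachable=%b%n", host, port, isReachable(host, port, 2000));
        }
    }
}
```

A successful TCP connection only shows that something is listening on the port; it does not prove that the broker is healthy.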

Go to the menu on the page, click Topics, then click the Create topic button.

Set the topic name to "demo-topic".

Set the number of partitions to 3 and click the Create button.

Redpanda will create a topic named "demo-topic" with 3 partitions.
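If you prefer to verify the topic without the web console, the HTTP Proxy that the compose file publishes on port 18082 can list topics. The sketch below uses the JDK HTTP client; the class name ListTopics is hypothetical, and I am assuming the proxy answers GET /topics with a JSON array of topic names, as the Kafka REST v2 convention suggests.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Lists topics through the Redpanda HTTP Proxy (host port 18082 in the compose file). */
final class ListTopics {

    /** Builds a GET /topics request for the given Docker host. */
    static HttpRequest listTopicsRequest(String dockerHost) {
        return HttpRequest.newBuilder(URI.create("http://" + dockerHost + ":18082/topics"))
                // Assumption: the proxy accepts the Kafka REST v2 media type here.
                .header("Accept", "application/vnd.kafka.v2+json")
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Assumption: the Docker host is passed as the first argument.
        String host = args.length > 0 ? args[0] : "localhost";
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(listTopicsRequest(host), HttpResponse.BodyHandlers.ofString());
        System.out.println("HTTP " + response.statusCode() + ": " + response.body());
        // Crude check without a JSON library: look for the quoted topic name.
        System.out.println("demo-topic present: " + response.body().contains("\"demo-topic\""));
    }
}
```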

Step 3: developing producer and consumer.

Download the project repository from GitHub: https://github.com/srecon/datastreaming-redpanda

The quick-start project contains two boilerplate Spring Boot applications, Producer and Consumer, where

producer - produces a greeting message every 2 seconds.

consumer - consumes the greeting messages and prints them to the console.

These two applications use the Spring Kafka template to produce and consume messages to and from the topic.

Change the application.properties file in both sub-projects. You should find the file in the /src/main/resources folder.

Add the IP address of your Docker host machine to the file.

    spring.kafka.bootstrap-servers=IP_ADDRESS_DOCKER_HOST:19092

To try out the build, issue the following at the command line.

    mvn clean package

The package phase builds the JAR files in each project's target folder, which the next steps run. If everything goes well, you are ready to start the applications.

Step 4: start the producer application.

Run the following command from the folder "redpanda-producer":

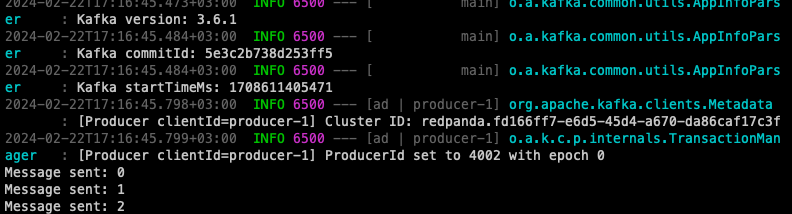

    java -jar ./target/redpanda-producer-0.0.1-SNAPSHOT.jar test

You should see a lot of log output in the terminal. At the end of the log, you should find something like this.

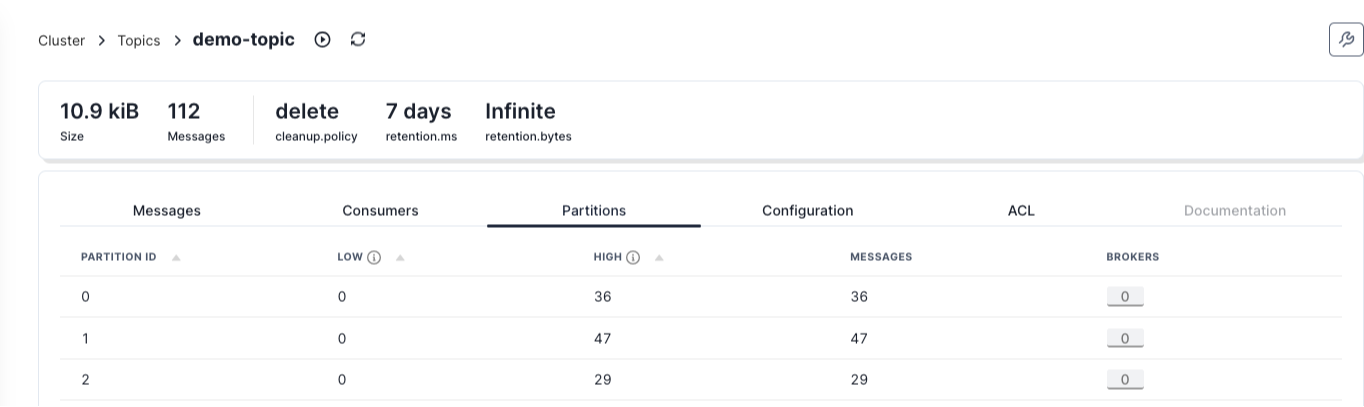

The Spring Boot producer connects to the Redpanda broker over a TCP socket and starts sending messages to the topic named "demo-topic". If you open the Redpanda web console and monitor the topic "demo-topic", you should see messages in all three partitions.

Through the web console, you can also see each message's payload and metadata.
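As an extra smoke test that does not involve Spring at all, a single record can be posted through the HTTP Proxy on port 18082. This is not how the producer in the repository works; it is only a sketch with the JDK HTTP client. The class name HttpProxyProduce is hypothetical, and the request shape (POST /topics/{topic} with a "records" array and the application/vnd.kafka.json.v2+json content type) is my assumption based on the Kafka REST v2 convention that the proxy follows.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Posts one JSON-encoded record to a topic through the Redpanda HTTP Proxy. */
final class HttpProxyProduce {

    /** Wraps a text value in the "records" envelope; escapes only backslashes and quotes. */
    static String recordsBody(String value, int partition) {
        String escaped = value.replace("\\", "\\\\").replace("\"", "\\\"");
        return "{\"records\":[{\"value\":\"" + escaped + "\",\"partition\":" + partition + "}]}";
    }

    /** Builds the POST request for the given Docker host and topic. */
    static HttpRequest produceRequest(String dockerHost, String topic, String value, int partition) {
        return HttpRequest.newBuilder(URI.create("http://" + dockerHost + ":18082/topics/" + topic))
                .header("Content-Type", "application/vnd.kafka.json.v2+json")
                .POST(HttpRequest.BodyPublishers.ofString(recordsBody(value, partition)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Assumption: the Docker host is passed as the first argument.
        String host = args.length > 0 ? args[0] : "localhost";
        HttpRequest request = produceRequest(host, "demo-topic", "Hello from the HTTP Proxy", 0);
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println("HTTP " + response.statusCode() + ": " + response.body());
    }
}
```

The record should then show up in partition 0 of "demo-topic" in the web console, next to the messages from the Spring Boot producer.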

Let's run our consumer.

Step 5: start the consumer application.

In another terminal, open the folder redpanda-consumer and run the following command.

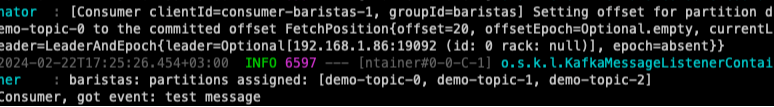

    java -jar ./target/redpanda-consumer-0.0.1-SNAPSHOT.jar

The application should start immediately and begin consuming messages from the topic.

The consumer client connects to the broker and consumes events from the topic. In these two examples, we used only the Spring Kafka implementation to work with Redpanda.
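To cross-check what the consumer prints, the records in each partition can also be read directly through the HTTP Proxy on port 18082. The sketch below is not part of the repository; the class name HttpProxyFetch is hypothetical, and the endpoint with its query parameters (offset, timeout, max_bytes) and the binary media type are assumptions based on the Kafka REST v2 convention. With the binary format, each value in the response is Base64-encoded, so a small decoding helper is included.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Reads records from each partition of demo-topic through the Redpanda HTTP Proxy. */
final class HttpProxyFetch {

    /** Builds the records URI for one partition, starting at the given offset. */
    static URI recordsUri(String dockerHost, String topic, int partition, long offset) {
        return URI.create("http://" + dockerHost + ":18082/topics/" + topic
                + "/partitions/" + partition
                + "/records?offset=" + offset + "&timeout=1000&max_bytes=100000");
    }

    /** Decodes a Base64 "value" field from the response into UTF-8 text. */
    static String decodeValue(String base64Value) {
        return new String(Base64.getDecoder().decode(base64Value), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws Exception {
        // Assumption: the Docker host is passed as the first argument.
        String host = args.length > 0 ? args[0] : "localhost";
        HttpClient client = HttpClient.newHttpClient();
        // demo-topic was created with 3 partitions, numbered 0 to 2.
        for (int partition = 0; partition < 3; partition++) {
            HttpRequest request = HttpRequest.newBuilder(recordsUri(host, "demo-topic", partition, 0))
                    .header("Accept", "application/vnd.kafka.binary.v2+json")
                    .GET()
                    .build();
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println("partition " + partition + ": " + response.body());
        }
    }
}
```

The raw JSON is printed as-is to keep the sketch free of third-party libraries; pass any "value" field to decodeValue to see the original message text.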

In the next part of this series, we will explore other features of Redpanda step by step.